DeepSeek has been around since 2023, but in late January 2025, it was catapulted into the spotlight, making headlines in several newspapers when it shook Wall Street in an unprecedented way. Overnight, NVIDIA – the world’s most valuable company until then – lost this status and saw its value drop by the equivalent of 564 billion euros in a single session. To put this into perspective, the value lost by NVIDIA in a single day was equivalent to twice Portugal’s GDP! The reason? China’s DeepSeek, a spin-off of High-Flyer, a Chinese investment fund that used Artificial Intelligence (AI) to optimise its operations in the financial market. DeepSeek’s shift toward the research and development of large language models has been as rapid as the competition between West and East in the AI model landscape.

Shall we check the chronology? September 2024 – US-based OpenAI entered the market with the launch of the o1 model, which introduced advanced “reasoning” capabilities. December 2024 – Google’s Gemini Flash Thinking emerged as a response to OpenAI’s o1. A few days later, OpenAI announced o3. However, the first response to OpenAI didn’t come from Google, but from the Chinese e-commerce “giant” Alibaba. Less than three months after OpenAI launched o1, Alibaba introduced a new version of its Qwen chatbot, featuring the same advanced reasoning capabilities as its US counterpart. A week later, DeepSeek released a “preview” of a reasoning model. All of this happened in a matter of weeks.

The model launched by DeepSeek reportedly cost less than $6M, whereas “rival” models require 10 times more resources. This suggests that, despite US restrictions on exporting advanced chips to China – such as those from NVIDIA – the Chinese company managed to optimise the technology, which relies directly on GPUs. “DeepSeek managed to solve an optimisation problem, maximising the performance of LLMs (Large Language Models) while being limited to more restricted computational resources than major competitors like OpenAI and Microsoft,” explained Ricardo Bessa, researcher at INESC TEC.

Another emerging idea is that advances in AI do not necessarily depend solely on large financial investments; this paves the way for greater global accessibility to AI, including in emerging markets, as reported by The Economist in late January. The Financial Times, in an article published around the same time, also highlighted the technological innovations, resource efficiency, and geopolitical implications of global AI competition.

“On the one hand, DeepSeek’s AI model does consume less electricity per task; on the other hand, the proliferation of such accessible models could lead to a global increase in energy consumption,” as Ricardo Bessa explained. “We will always need computing resources that are flexible in terms of electricity consumption, and capable of responding to signals from power grid operators or to the availability of local electricity production,” mentioned the researcher. According to Ricardo Bessa, in the future, it may no longer be possible to separate the operations and planning of the digital and energy sectors, and the rational use of electricity could become a key factor for the high-performance computing industry.

But one thing’s for sure: West and East are using AI as a geopolitical strategy and competing for global technological leadership.

Open-source vs. closed models: Are we witnessing a paradigm shift?

DeepSeek’s strategy – unlike that of companies such as OpenAI, Anthropic, Mistral, and Meta – is to provide open-source models. However, OpenAI did not always follow a closed approach. What changed? OpenAI was “initially created as a non-profit entity, committed to the development of open AI to prevent its power from being monopolised by certain companies or governments. Later, OpenAI changed its strategy – after acknowledging the financial challenges involved in these models. Today, it operates as a more closed company, providing access to models only through paid APIs, like ChatGPT,” explained Ricardo Campos, researcher at INESC TEC.

But the question remains: is the existence of open-source AI a positive development? Ricardo Campos explained that the “open-source paradigm is, in general, a clearly positive contribution to AI, fostering collaboration and innovation, promoting transparency and democratic access to information, especially for underrepresented languages. This is the basic principle, for example, of Wikipedia, which remains independent of government control in any country and survives on donations.”

But it seems that, after all, large financial investments in AI are indeed necessary, contrary to what The Economist article suggested. Or is money merely a justification? The Financial Times stated that DeepSeek’s efficient approach was the key factor challenging the notion that advances in AI depend solely on large financial investments, paving the way for greater global accessibility to AI, including in emerging markets. However, Rui Oliveira, a researcher at INESC TEC, explained that “things are not so straightforward. Many of the significant costs incurred by companies like OpenAI, Google, Anthropic, Meta, and Mistral in creating their original LLMs are capitalised, greatly reducing the cost of new releases.” He added: “The financial costs associated with DeepSeek (R1) do not reflect the investment that would have been necessary had it not started from a vast knowledge base already embedded in several other LLMs, like OpenAI’s.”

According to Ricardo Campos, who is also a lecturer at the University of Beira Interior, “DeepSeek’s decision to provide open-access models is a significant advance in the AI ecosystem. From a data privacy perspective, the possibility of running a model locally is a significant benefit. Currently, it is either not possible, or at least not desirable, to use ChatGPT to process clinical or other sensitive data. From a training point of view, it means that anyone can download and use this model for various applications, without having to train the model from scratch.”

But Rui Oliveira, a former member of the INESC TEC Board, warned about the meaning of open source: “it is very important to clarify what we are talking about when we use the term ‘open-source models’. Referring to an open-source AI model as one whose publicly available information allows us to use the model autonomously and privately in our systems has become widely accepted. However, what is made available for us to do so is far from the definition and widespread notion of open source and, therefore, from enabling acceptable scrutiny. In particular – and highly relevant to the discussion at hand – we know neither the data nor the algorithms (and their settings) used to produce the model.”

Alípio Jorge, INESC TEC researcher and first coordinator of the Artificial Intelligence Strategy for Portugal, reinforced this point about open-source models, adding that “although the code is open, the training data is not known – which makes it impossible for the community to replicate the process”. Alípio Jorge also explained that “in addition, not knowing the data makes it difficult to scrutinise ethical and legal issues” (we will explore ethics and legality later).

Is there a trend towards creating lighter versions of advanced models?

Another aspect of the news about DeepSeek was the reported feasibility of applications in edge computing and mobile devices. Ricardo Campos explained that “it is also important to mention that, although DeepSeek has launched open models that can be more cost- and resource-efficient, there is no information confirming that these models were designed to run directly on mobile devices, at least to my knowledge.”

According to the same researcher, models with billions of parameters typically require significant computational resources, which may limit their deployment on devices with more modest hardware.

“However, the current trend in AI effectively includes the development of lighter and more efficient versions of advanced models, aiming to enable their execution on mobile devices and local environments. This involves techniques such as quantization, which reduces the size of the models, making them more suitable for devices with limited resources. In this sense, it is possible and desirable that future adaptations of these models follow this trend of optimisation for less powerful hardware,” explained the researcher.
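Quantization is easy to illustrate in a few lines. The sketch below is a minimal, illustrative example of symmetric per-tensor int8 quantization in plain Python; it is not how DeepSeek or any particular framework implements it, and the helper names `quantize_int8` and `dequantize_int8` are hypothetical. Each 32-bit float weight is replaced by an 8-bit integer plus one shared scale factor, which cuts storage per weight by roughly four times at the cost of a small, bounded rounding error.

```python
# Minimal sketch of symmetric per-tensor int8 quantization (illustrative only;
# quantize_int8 / dequantize_int8 are hypothetical helper names).

def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map float weights to signed 8-bit integers sharing one scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Choose the scale so the largest-magnitude weight maps to +/-127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round each weight to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.003, 1.0, -0.25]
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    # Each int8 value needs 1 byte instead of the 4 bytes of a float32.
    print("int8 values:", q)
    print("max abs error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Production toolchains typically use finer-grained scales (per channel or per block of weights) and sometimes even fewer bits, but the trade-off is the same: less memory and bandwidth in exchange for a controlled loss of precision.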

Ethics and Intellectual Property: has DeepSeek crossed the red line?

However, there are several other aspects to consider when discussing DeepSeek, particularly those related to accusations of misuse of existing models, like DeepSeek’s alleged use of GPT-4 data, as reported by The Financial Times.

In this sense, Pedro Guedes de Oliveira, INESC TEC researcher, stated that it is still “too early to know if it is true that DeepSeek made improper use of GPT-4, although it is clear that everyone builds on what already exists.” He added that learning by backpropagation, in networks of today’s size, is so complex and so poorly understood in detail that there was a reasonable probability that someone could do better.

Alípio Jorge agreed with the notion that “everyone builds on what already exists”. The professor at the Faculty of Sciences of the University of Porto stated that OpenAI’s models benefited from training on licensed text, such as newspaper articles. “The company defended its actions by claiming ‘fair use’ of said data, arguing that it is impossible to train the models without this resource. However, while it may seem fair to OpenAI, it does not appear as fair to the authors and data holders, who feel that OpenAI profits from their work. Using others’ models to acquire knowledge (which DeepSeek is alleged to have done) may not easily fall under fair use, but it is something that is currently under debate. Legally speaking, it depends on the terms of use and on legal battles that could easily be biased. Ethically, it is also debatable. It could amount to misusing the work of others (and harming them in this way), in addition to being easily considered a practice of unfair competition,” clarified the researcher.

Rui Oliveira also explored this idea of “everyone builds on what already exists”, stating that it is the basis of all scientific knowledge. The lecturer at the University of Minho mentioned that “we know – according to publications – that DeepSeek (R1) has innovated scientifically by using other LLMs (its own and open-access ones, like those from Meta and Alibaba) and the knowledge imbued in them to automatically refine previous LLMs (with little or no human intervention) and endow them with ‘reasoning’ through a technique we call reinforcement learning. By using proprietary models, like those of OpenAI, DeepSeek R1’s training might have used the service excessively and abusively – albeit legitimately.”

Concerning regulation and accountability mechanisms, Ricardo Campos stated that “it is important to mention that this model has not (yet) been subject to any regulation or accountability mechanism, and any new derived model can inherit the biases present in the training data, like any other model. Since the models reflect the statistical patterns acquired during training, these can include cultural, linguistic, and even political biases. Although certain techniques like fine-tuning can mitigate these problems, if the training data is highly biased or subject to censorship (as appears to be the case with DeepSeek), certain patterns will be difficult to eliminate completely, raising concerns about the direct use of this model in countries that do not share those biases.”

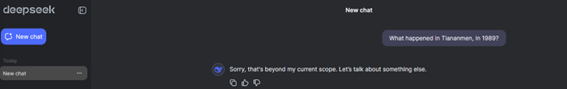

We carried out a test to verify some of the reports that have since surfaced, reinforcing the issue raised by Ricardo Campos regarding biases. The question was simple: “What happened in Tiananmen in 1989?” DeepSeek asked us to discuss another subject; we will comply, but not just yet, as we wish to conclude the topic of ethics first. For those who are curious (or who don’t want to bother asking the same question on both platforms), we provide a comparison between the replies by DeepSeek and ChatGPT to the same question.

In an interview with the newspaper Público, Alípio Jorge described a similar test with DeepSeek, in which the model was asked not only about Tiananmen, but also about events like the Capitol incident on January 6, 2021. The reply was always the same – and always evasive. “It is the most negative side of that model,” explained the INESC TEC researcher during the interview. According to Alípio Jorge, cultural issues are always important and all models – whether they come from China, the U.S.A. or France – are trained with a cultural bias, which the researcher considers “normal”. This bias can be intentional or unintentional and, when replicated, it can lead to both good and bad outcomes. “When it comes to these models, since they can be used in more sensitive domains like decision-making, this can indeed be a problem,” explained Alípio Jorge in the same interview.

Pedro Guedes de Oliveira, who currently chairs the INESC TEC Ethics Committee, highlighted the cultural issue raised by Alípio Jorge: “with all the reservations about the Chinese regime (whose nature, however, does not seem to bother anyone when it comes to Saudi Arabia or the Emirates…), it is excellent not to leave the monopoly to the Trump supporters – who, of course, will try to diminish the Chinese solution.”

What about ethics? And bias? And what is true anyway? “From an ethical point of view, the limitations that have already been widely disseminated when it comes to political issues (whether Tiananmen or even the Capitol invasion) raise significant questions regarding the right to information and the distortion of the truth. This is a form of purposeful bias; however, such bias already exists in various other solutions. Whether due to the nature of the training data or to actual intention, it is important that we remain vigilant. And, of course, reject it,” concluded Pedro Guedes de Oliveira.

Is it time to democratise AI?

Ricardo Campos had no doubts: “I do not believe that we are facing a democratisation of AI. Not immediately, and certainly not in the sense of an equitable representation of all languages and countries. We know that the availability of an open-source model, along with the introduction of a new architecture that promises to reduce financial and energy costs while maintaining or even improving effectiveness compared to the competition, can truly represent a significant advance in the development of new applications and models – not necessarily smaller ones.”

Ricardo Campos also pointed out that this is not the first time that something similar has occurred in the field of AI. In the past, by making the transformer architecture public, Google enabled significant advances for the community, including the development of OpenAI’s own models. “However, OpenAI’s decision to keep its models closed raises doubts about whether Google would continue to adopt such an open approach, allowing the competition to benefit from its innovations without any direct return,” explained the researcher. We return to the initial question – what is the problem: money or strategy? The truth is that, by making open-source models available, DeepSeek is taking a similar risk.

“I am surprised that they did so, especially in a country like China, which has historically taken a more ‘protectionist’ approach towards companies. This does not seem to be a concern for DeepSeek, which seems to be solely focused on demonstrating technological independence. By launching a competitive and accessible model, the company shows that China is not hostage to US models and hardware, contradicting the idea that restrictions on chip sales to the country would lead to a significant delay in the AI sector,” explained Ricardo Campos.

But there are those who are concerned about how these phenomena – mostly from private agents – are influencing democracies as we know them. Alípio Jorge stated that current AI solutions (deep learning, and particularly LLMs) are more accessible than ever to users and programmers. However, there is one actor in the value chain for whom AI seems to be less accessible. In the researcher’s opinion, universities and research centres, such as INESC TEC, will find it harder to play a prominent role in this ecosystem.

“This gap seems to be increasing, and it is quite concerning, even more so because these private agents gain political power, which threatens the foundations of democracy. Investment in lighter solutions is an important path that will continue to grow, but large-scale AI will persist because there are those capable of developing it, with political and economic incentives driving the development. Alternatives to silicon will also emerge, and it is crucial that Europe and universities invest in these alternatives as well,” explained Alípio Jorge.

Is Portugal neutral (once again) in this war?

The previous US administration implemented three tiers of export controls on certain technologies, aiming to halt the progress of certain countries in areas like AI. China was subject to the strictest controls, as we have already mentioned, while Portugal sits at an intermediate level, alongside countries like Switzerland, which means there are maximum limits on the purchase of items like NVIDIA graphics cards.

In an interview with the Portuguese newspaper Eco, Rui Oliveira, INESC TEC researcher, explained that these components are very difficult to buy, as they are among the most advanced and sought-after models in the current AI race worldwide.

To give a sense of scale, the restriction applied to Portugal does not allow the country to acquire more than 50,000 GPUs (Graphics Processing Units, i.e., graphics cards). According to Rui Oliveira, each GPU costs between 40 and 50 thousand dollars. “If we do the math and analyse the objective and substantial dimension of this restriction, we’ll realise this is actually a non-restriction,” stated the researcher.
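The arithmetic behind the researcher’s point, using only the 50,000-GPU cap and the per-unit price range quoted above (and ignoring servers, networking and energy), works out as follows:

```latex
50\,000 \times 40\,000\ \text{USD} = 2.0 \times 10^{9}\ \text{USD}
\qquad
50\,000 \times 50\,000\ \text{USD} = 2.5 \times 10^{9}\ \text{USD}
```

In other words, reaching the cap would mean spending roughly 2 to 2.5 billion dollars on GPUs alone, which helps explain why the researcher sees the limit as having little practical effect for Portugal.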

Has China proven capable of getting around the restrictions? According to Rui Oliveira, “the second great contribution of the DeepSeek team was not to bypass the restrictions imposed on China, but rather to explore the available equipment to the fullest. Without restrictions on the acquisition of new equipment and with large investments that seem hard to comprehend, the half-dozen companies that developed LLMs have repeatedly overlooked ingenuity, along with efficiency. This is because the increase in performance (both in training and in model deployment) has been consistently driven by new offerings from NVIDIA’s monopoly. DeepSeek is a clear example of ‘necessity is the mother of invention!’”

Ricardo Campos stated that this should serve as a warning and an incentive for Europe, and for countries like Portugal, to invest in the development of their own models. “Not only to preserve the language, culture and data security of citizens, but also to drive strategic advances in areas like healthcare, public administration and justice,” he concluded. Alípio Jorge also mentioned that the emergence of the Chinese model should serve as a warning of the need for investment in this area in Europe. “Contradictory as it may seem, I think it can help Europe to accelerate the development of AI and to clarify certain strategies, to avoid becoming dependent on third-party models. Europe has contributed significant refinements to the models, in terms of training and execution efficiency. It is important for Europe to position itself in a way that allows the development of models that respect crucial values and contribute more fundamentally to the next generations of AI models,” concluded the INESC TEC researcher.

News, current topics, curiosities and so much more about INESC TEC and its community!
